This site is maintained and updated by Sadman Kabir Soumik

[Most of the contents in this page are collected from different blogs, videos, books written/made by other people. References are given with each section.]

Overfitting vs Underfitting

Overfitting happens when your model is too complex. For example, if you are training a deep neural network with a very small dataset like dozens of samples, then there is a high chance that your model is going to overfit.

Underfitting happens when your model is too simple. For example, if your linear regression model trying to learn from a very large data set with hundreds of features.

Few solutions for model overfit:

- Reduce the network’s capacity by removing layers or reducing the number of elements in the hidden layers.

- Apply regularization, which comes down to adding a cost to the loss function for large weights.

- Use Dropout layers, which will randomly remove certain features by setting them to zero.

Bias-Variance Trade-off

Bias is error due to wrong or overly simplistic assumptions in the learning algorithm you’re using. This can lead to the model underfitting your data, making it hard for it to have high predictive accuracy and for you to generalize your knowledge from the training set to the test set.

Variance is error due to too much complexity in the learning algorithm you’re using. This leads to the algorithm being highly sensitive to high degrees of variation in your training data, which can lead your model to overfit the data. You’ll be carrying too much noise from your training data for your model to be very useful for your test data.

The bias-variance decomposition essentially decomposes the learning error from any algorithm by adding the bias, the variance and a bit of irreducible error due to noise in the underlying dataset. Essentially, if you make the model more complex and add more variables, you’ll lose bias but gain some variance — in order to get the optimally reduced amount of error, you’ll have to tradeoff bias and variance. You don’t want either high bias or high variance in your model.

- Too simple model -> model underfit -> Bias

- Too complex model -> model overfit -> Variance

ML System Design

Books

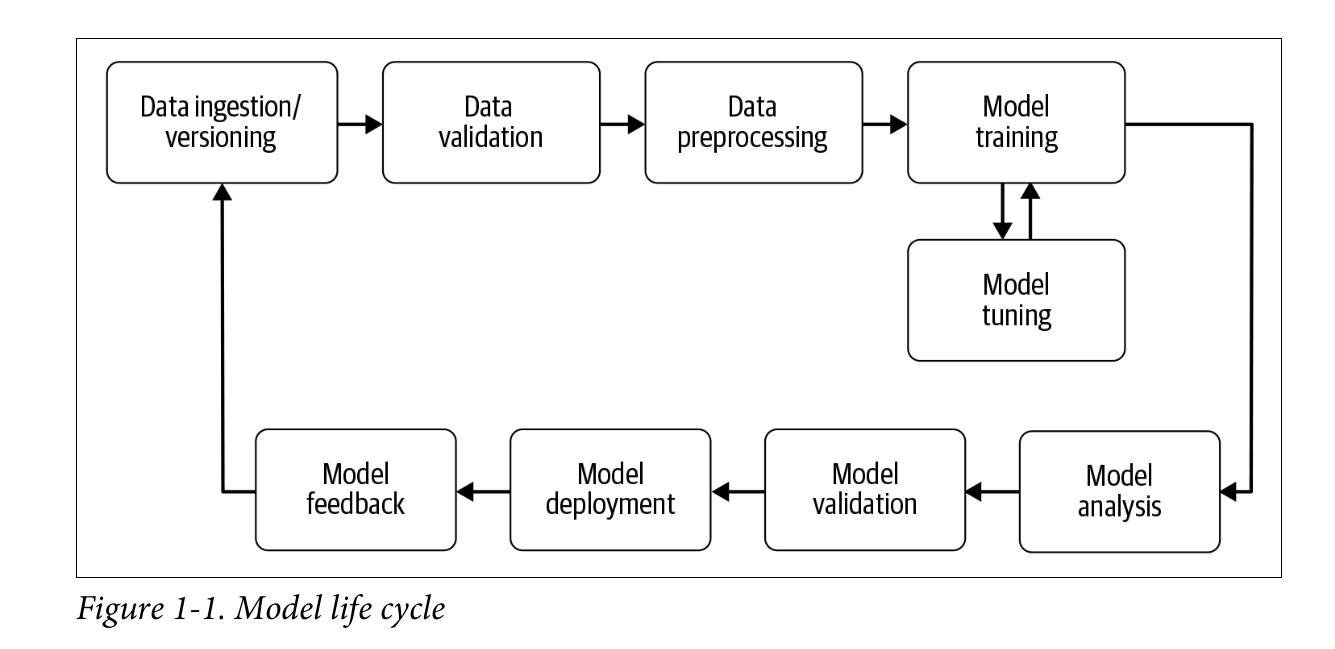

Designing Machine Learning Systems by Chip Huyen

Building Machine Learning Pipelines - Automating Model Life Cycles with TensorFlow by Hannes Hapke & Catherine Nelson

Parameter vs Hyperparameter

Parameters are estimated or learned from data. They are not manually set by the practitioners. For example, model weights in ANN.

Hyperparameters are set/specified by the practitioners. They are often tuned for a given predictive modeling problem. For example,

- The K in the K-nearest neighbors

- Learning rate

- Batch size

- Number of epochs

If your training data classification accuracy is 80% and test data accuracy is 60% what will you do?

So, we have a overfit model. To prevent overfitting, we can take the following steps:

- Your model needs be improved (change parameters)

- You may need to try a different machine learning algorithm (not all algorithms created equal)

- You need more data (subtle relationship difficult to find), train with more data.

- Reduce the network size (remove layers).

- Use k-fold cross validation.

- Add weight regularization with cost function (L1 and L2 regularization).

- Remove irrelevant features.

- Add dropout.

- Apply data augmentation.

- Use early stopping.

Test accuracy is higher than the train accuracy

It’s not normal at all. Possible reasons:

- The model is underfitting and representing neither the train nor the test data.

- There is a possibility of data leakage. It can happen due to peek at the test dataset as you go.

- Do you see very high accuracy? Then perhaps, you might have included a variable similar to dependent variable in the features.

- Give a try changing random state.

- Is it a time series data? Does the test data come from the same population distribution?

Ref: Kaggle

What is Data Leakage in ML

Data leakage can cause you to create overly optimistic if not completely invalid predictive models.

Data leakage is when information from outside the training dataset is used to create the model. This additional information can allow the model to learn or know something that it otherwise would not know and in turn invalidate the estimated performance of the mode being constructed.

-

if any other feature whose value would not actually be available in practice at the time you’d want to use the model to make a prediction, is a feature that can introduce leakage to your model

-

when the data you are using to train a machine learning algorithm happens to have the information you are trying to predict

Data is one of the most critical factors for any technology. Similarly, data plays a vital role in developing intelligent machines and systems in machine learning and artificial intelligence. In Machine Learning, when we train a model, the model aims to perform well and give high prediction accuracy. However, imagine the situation where the model is performing exceptionally well. In contrast, testing, but when it is deployed for the actual project, or it is given accurate data, it performs poorly. So, this problem mainly occurs due to Data Leakage.

A scenario when ML model already has information of test data in training data, but this information would not be available at the time of prediction, called data leakage. It causes high performance while training set, but perform poorly in deployment or production.

Data leakage generally occurs when the training data is overlapped with testing data during the development process of ML models by sharing information between both data sets. Ideally, there should not be any interaction between these data sets (training and test sets).

Ref: javapoint

Best deep CNN architectures and their principles: from AlexNet to EfficientNet

Read from theaisummer

How to improve the accuracy of image recognition models

-

Get more data, improve the quality of data. e.g examine the dataset, and remove bad images. You may consider increasing the diversity of your available dataset by employing data augmentation.

-

Adding more layers to your model increases its ability to learn your dataset’s features more deeply. This means that it will be able to recognize subtle differences that you, as a human, might not have picked up on.

-

Change the image size. If you choose an image size that is too small, your model will not be able to pick up on the distinctive features that help with image recognition. Conversely, if your images are too big, it increases the computational resources required by your computer and/or your model might not be sophisticated enough to process them.

-

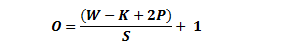

Increase the epochs. Epochs are basically how many times you pass the entire dataset through the neural network. Incrementally train your model with more epochs with intervals of +25, +100, and so on.

Increasing epochs makes sense only if you have a lot of data in your dataset. However, your model will eventually reach a point where increasing epochs will not improve accuracy.

-

Decrease Colour Channels. Colour channels reflect the dimensionality of your image arrays. Most colour (RGB) images are composed of three colour channels, while grayscale images have just one channel.

The more complex the colour channels are, the more complex the dataset is and the longer it will take to train the model.

If colour is not such a significant factor in your model, you can go ahead and convert your colour images to grayscale.

What is gradient descent?

Gradient descent is an optimization algorithm used to find the values of parameters (coefficients) of a function (f) that minimizes a cost function (cost).

Gradient descent is best used when the parameters cannot be calculated analytically (e.g. using linear algebra) and must be searched for by an optimization algorithm.

Data standardization vs Normalization

Normalization typically means rescales the values into a range of [0,1].

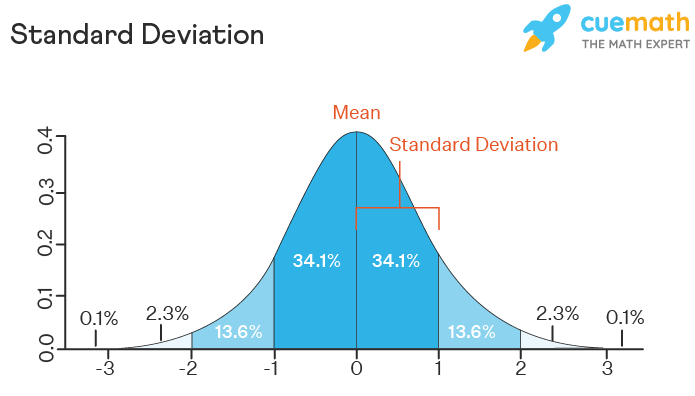

Standardization: typically means rescales data to have a mean of 0 and a standard deviation of 1 (unit variance).

Why do we normalize data

For machine learning, every dataset does not require normalization. It is required only when features have different ranges.

For example, consider a data set containing two features, age(x1), and income(x2). Where age ranges from 0–100, while income ranges from 0–20,000 and higher. Income is about 1,000 times larger than age and ranges from 20,000–500,000. So, these two features are in very different ranges. When we do further analysis, like multivariate linear regression, for example, the attributed income will intrinsically influence the result more due to its larger value. But this doesn’t necessarily mean it is more important as a predictor.

Because different features do not have similar ranges of values and hence gradients may end up taking a long time and can oscillate back and forth and take a long time before it can finally find its way to the global/local minimum. To overcome the model learning problem, we normalize the data. We make sure that the different features take on similar ranges of values so that gradient descents can converge more quickly.

When Should You Use Normalization And Standardization

Normalization is a good technique to use when you do not know the distribution of your data or when you know the distribution is not Gaussian (a bell curve). Normalization is useful when your data has varying scales and the algorithm you are using does not make assumptions about the distribution of your data, such as k-nearest neighbors and artificial neural networks.

Standardization assumes that your data has a Gaussian (bell curve) distribution. This does not strictly have to be true, but the technique is more effective if your attribute distribution is Gaussian. Standardization is useful when your data has varying scales and the algorithm you are using does make assumptions about your data having a Gaussian distribution, such as linear regression, logistic regression, and linear discriminant analysis.

Normalization -> Data distribution is not Gaussian (bell curve). Typically applies in KNN, ANN

Standardization -> Data distribution is Gaussian (bell curve). Typically applies in Linear regression, logistic regression.

Note: Algorithms like Random Forest (any tree based algorithm) does not require feature scaling.

How to determine the optimal threshold for a classifier and generate ROC curve?

The choice of a threshold depends on the importance of TPR and FPR classification problem. For example, if your classifier will decide which criminal suspects will receive a death sentence, false positives are very bad (innocents will be killed!). Thus you would choose a threshold that yields a low FPR while keeping a reasonable TPR (so you actually catch some true criminals). If there is no external concern about low TPR or high FPR, one option is to weight them equally by choosing the threshold that maximizes TPR − FPR.

Few Unsupervised Learning Algorithms

- K-means clustering.

- PCA

- ICA

- Apriori

Semi-supervised and self-supervised methods

- Auto-encoder

Anomaly detection algorithms

- Auto-encoder

- SVM

- KNN (Visualization)

- DBScan

- Bayesian Networks

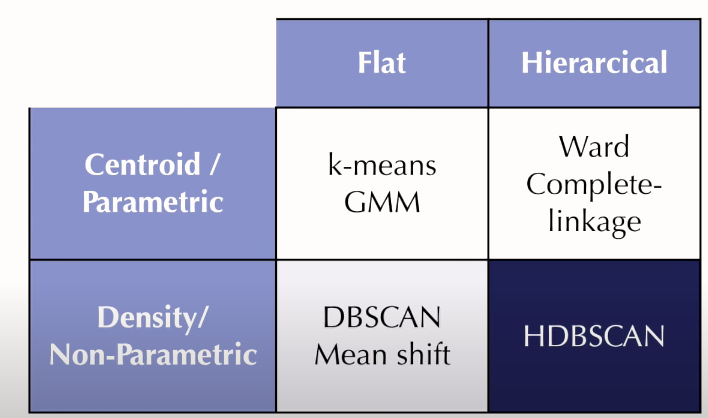

Clustering Algorithms

- K-means

- KNN

- PCA

- SVD

How PCA works?

PCA helps us to identify patterns in data based on the correlation between features. In a nutshell, PCA aims to find the directions of maximum variance in high-dimensional data and projects it onto a new subspace with equal or fewer dimensions than the original one.

Imputation for Missing Data

-

Replace missing value with the mean of other observations from the same feature.

-

If there are outliers in the data, we can replace the missing data with median of the feature.

-

Better way would be to use KNN to find the similar observations/samples, and then replace missing values with their (similar samples) average.

KNN works better for numerical data.

-

For Categorical data, we can do Random forest/ ANN to predict the missing values.

What does standard deviation tell you?

A standard deviation (or σ) is a measure of how dispersed the data is in relation to the mean. Low standard deviation means data are clustered around the mean, and high standard deviation indicates data are more spread out.

How to calculate std:

| = | population standard deviation | |

|---|---|---|

| = | the size of the population | |

| = | each value from the population | |

| = | the population mean |

Ref: Wikipedia

Hypothesis testing using P-value

P value is the probability for the null hypothesis to be true.

P-values are used in hypothesis testing to help decide whether to reject the null hypothesis. The smaller the p-value, the more likely you are to reject the null hypothesis.

Null hypothesis: An assumption that treats everything same or equal. Let’s say, I have made an assumption that global GDP would be same before and after the covid pandemic, and that’s my null hypothesis. Now, using the GDP data, we can find the p-value and justify our null hypothesis.

Steps:

- Collect data

- Define significance level; many cases it’s 0.05

- Run some statistical test (given below).

Now, let’s say, we have run the test on 100 countries and out p value is 0.05, it means our null hypothesis would be true for only 5 countries.

Standard industry standard significance levels are:

- 0.01 < p_value: very strong evidence against null hypothesis.

- 0.01 <= p_value < 0.05 : strong evidence against null hypothesis.

- 0.05 <= p_value < 0.10 : mild evidence against null hypothesis.

- p_value >= 0.10 : accept null hypothesis.

There are different statistical tests for calculating p-value:

- Z-test

- T-Test

- Anova

- Chi-square

P-value and Hypothesis testing

Watch this video as well:

When to use Linear Regression?

Perhaps evident, for linear regression to work, we need to ensure that the relationship between the features and the target variable is linear. If it isn’t, linear regression won’t give us good predictions.

Sometimes, this condition means we have to transform the input features before using linear regression. For example, if you have a variable with an exponential relationship with the target variable, you can use log transform to turn the relationship linear.

Linear regression will overfit your data when you have highly correlated features.

Linear regression requires that your features and target variables are not noisy. The less noise in your data, the better predictions you’ll get from the model. Here is Jason Brownlee in “Linear Regression for Machine Learning”:

Linear regression assumes that your input and output variables are not noisy. Consider using data cleaning operations that let you better expose and clarify the signal in your data.

Ref: https://today.bnomial.com/

How to find if there is any outlier in your data

Outliers are data points that are far from other data points. In other words, they’re unusual values in a dataset. Outliers are problematic for many statistical analyses because they can cause tests to either miss significant findings or distort real results.

You can convert extreme data points into z scores that tell you how many standard deviations away they are from the mean. If a value has a high enough or low enough z score, it can be considered an outlier. As a rule of thumb, values with a z score greater than 3 or less than –3 are often determined to be outliers.

To calculate the Z-score for an observation, take the raw measurement, subtract the mean, and divide by the standard deviation. Mathematically, the formula for that process is the following:

The further away an observation’s Z-score is from zero, the more unusual it is. A standard cut-off value for finding outliers are Z-scores of +/-3 or further from zero.

Ref: statisticsbyjim , scribbr

Loss Funcions

Machines learn by means of a loss function. It’s a method of evaluating how well specific algorithm models the given data. If predictions deviates too much from actual results, loss function would cough up a very large number. Gradually, with the help of some optimization function, loss function learns to reduce the error in prediction.

Vanishing Gradient Problem

As the backpropagation algorithm advances downwards(or backward) from the output layer towards the input layer, the gradients often get smaller and smaller and approach zero which eventually leaves the weights of the initial or lower layers nearly unchanged. As a result, the gradient descent never converges to the optimum. This is known as the *vanishing gradients* problem.

Why?

Certain activation functions, like the sigmoid function, squishes a large input space into a small input space between 0 and 1. Therefore, a large change in the input of the sigmoid function will cause a small change in the output. Hence, the derivative becomes small.

However, when n hidden layers use an activation like the sigmoid function, n small derivatives are multiplied together. Thus, the gradient decreases exponentially as we propagate down to the initial layers.

Solution

-

Use non-saturating activation function: because of the nature of sigmoid activation function, it starts saturating for larger inputs (negative or positive) came out to be a major reason behind the vanishing of gradients thus making it non-recommendable to use in the hidden layers of the network.

So to tackle the issue regarding the saturation of activation functions like sigmoid and tanh, we must use some other non-saturating functions like ReLu and its alternatives.

-

Proper weight initialization: There are different ways to initialize weights, for example, Xavier/Glorot initialization, Kaiming initializer etc. Keras API has default weight initializer for each types of layers. For example, see the available initializers for tf.keras in keras doc.

You can get the weights of a layer like below:

# tf.keras

model.layers[1].get_weights()

- Residual networks are another solution, as they provide residual connections straight to earlier layers.

- Use smaller learning rate.

- Batch normalization (BN) layers can also resolve the issue. As stated before, the problem arises when a large input space is mapped to a small one, causing the derivatives to disappear. Batch normalization reduces this problem by simply normalizing the input, so it doesn’t reach the outer edges of the sigmoid function.

# tf.keras

from keras.layers.normalization import BatchNormalization

# instantiate model

model = Sequential()

# The general use case is to use BN between the linear and non-linear layers in your network,

# because it normalizes the input to your activation function,

# though, it has some considerable debate about whether BN should be applied before

# non-linearity of current layer or works best after the activation function.

model.add(Dense(64, input_dim=14, init='uniform')) # linear layer

model.add(BatchNormalization()) # BN

model.add(Activation('tanh')) # non-linear layer

Batch normalization applies a transformation that maintains the mean output close to 0 and the output standard deviation close to 1.

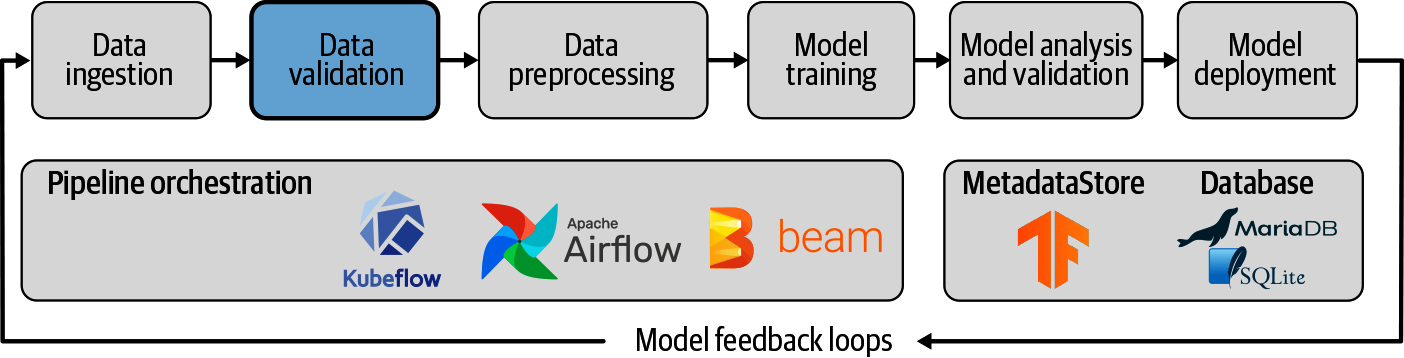

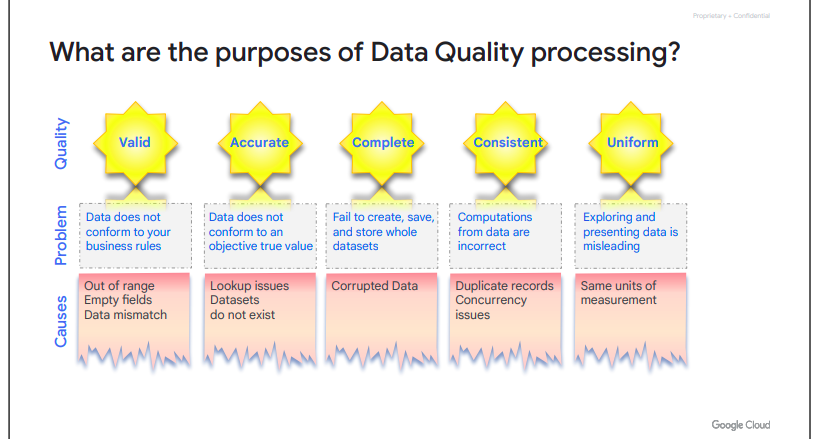

Data Validation for ML Pipeline

image source: Book: Building Machine Learning Pipelines by Hannes Hapke, Catherine Nelson

If our goal is to automate our machine learning model updates, validating our data is essential. In particular, when we say validating, we mean three distinct checks on our data:

- Check for data anomalies.

- Check that the data schema hasn’t changed.

- Check that the statistics of our new datasets still align with statistics from our previous training datasets.

The data validation step in our pipeline performs these checks and highlights any failures. If a failure is detected, we can stop the workflow and address the data issue by hand, for example, by curating a new dataset.

In a world where datasets continuously grow, data validation is crucial to make sure that our machine learning models are still up to the task. Because we can compare schemas, we can quickly detect if the data structure in newly obtained datasets has changed (e.g., when a feature is deprecated). It can also detect if your data starts to drift. This means that your newly collected data has different underlying statistics than the initial dataset used to train your model. This drift could mean that new features need to be selected or that the data preprocessing steps need to be updated (e.g., if the minimum or maximum of a numerical column changes). Drift can happen for a number of reasons: an underlying trend in the data, seasonality of the data, or as a result of a feedback loop.

The TensorFlow ecosystem offers a tool that can assist you in data validation, TFDV. It is part of the TFX project. TFDV allows you to perform the kind of analyses we discussed previously (e.g., generating schemas and validating new data against an existing schema). It also offers visualizations.

TFDV accepts two input formats to start the data validation: TensorFlow’s TFRecord and CSV files. In common with other TFX components, it distributes the analysis using Apache Beam.

For numerical features, TFDV computes for every feature:

- The overall count of data records

- The number of missing data records

- The mean and standard deviation of the feature across the data records

- The minimum and maximum value of the feature across the data records

- The percentage of zero values of the feature across the data records

In addition, it generates a histogram of the values for each feature.

For categorical features, TFDV provides:

- The overall count of data records

- The percentage of missing data records

- The number of unique records

- The average string length of all records of a feature

- For each category, TFDV determines the sample count for each label and its rank

Read more: Building Machine Learning Pipelines by Hannes Hapke, Catherine Nelson

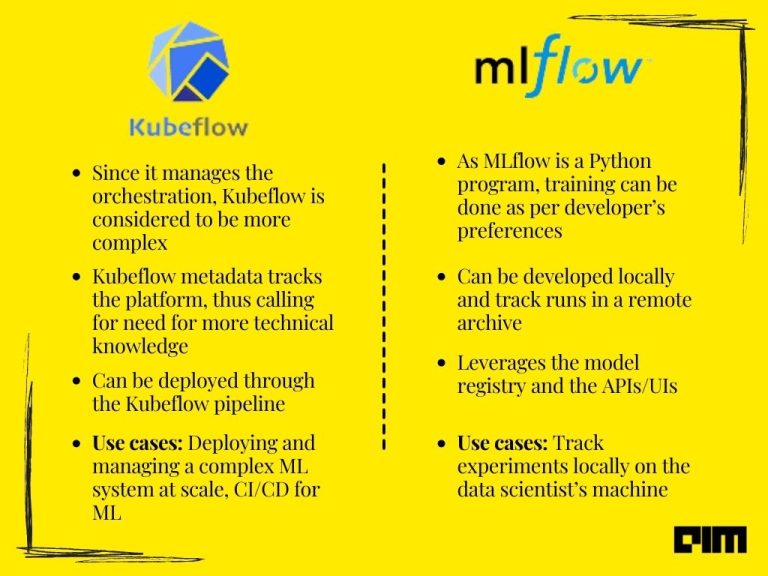

Automated Model Retraining with Kubeflow Pipelines

Read here

Why ReLU

ReLu is faster to compute than the sigmoid function, and its derivative is faster to compute. This makes a significant difference to training and inference time for neural networks.

Main benefit is that the derivative/gradient of ReLu is either 0 or 1, so multiplying by it won’t cause weights that are further away from the end result of the loss function to suffer from the vanishing gradient.

Benefits of Batch Normalization

the input values for the hidden layer are normalized

The idea is that, instead of just normalizing the inputs to the network, we normalize the inputs to layers within the network. It’s called “batch” normalization because during training, we normalize each layer’s inputs by using the mean and variance of the values in the current mini-batch (usually zero mean and unit variance).

- Networks train faster — Each training iteration will actually be slower because of the extra calculations during the forward pass and the additional hyperparameters to train during back propagation. However, it should converge much more quickly, so training should be faster overall.

- Allows higher learning rates — Gradient descent usually requires small learning rates for the network to converge. And as networks get deeper, their gradients get smaller during back propagation so they require even more iterations. Using batch normalization allows us to use much higher learning rates, which further increases the speed at which networks train.

What is weight decay

Having fewer parameters is only one way of preventing our model from getting overly complex. But it is actually a very limiting strategy. More parameters mean more interactions between various parts of our neural network. And more interactions mean more non-linearities. These non-linearities help us solve complex problems.

However, we don’t want these interactions to get out of hand. Hence, what if we penalize complexity. We will still use a lot of parameters, but we will prevent our model from getting too complex. This is how the idea of weight decay came up.

One way to penalize complexity, would be to add all our parameters (weights) to our loss function. Well, that won’t quite work because some parameters are positive and some are negative. So what if we add the squares of all the parameters to our loss function. We can do that, however it might result in our loss getting so huge that the best model would be to set all the parameters to 0.

To prevent that from happening, we multiply the sum of squares with another smaller number. This number is called *weight decay* or wd.

Our loss function now looks as follows:

Loss = MSE(y_hat, y) + wd * sum(w^2)

How to use Keras Pretrained models

Ref: Medium

Type 1 error vs type 2 error

You decide to get tested for COVID-19 based on mild symptoms. There are two errors that could potentially occur:

| Error Name | Example |

|---|---|

| Type 1 Error (FP) | The test result says you have corona-virus, but you actually don’t. |

| Type 2 Error (FN) | The test result says you don’t have corona-virus, but you actually do. |

Ref: scribbr

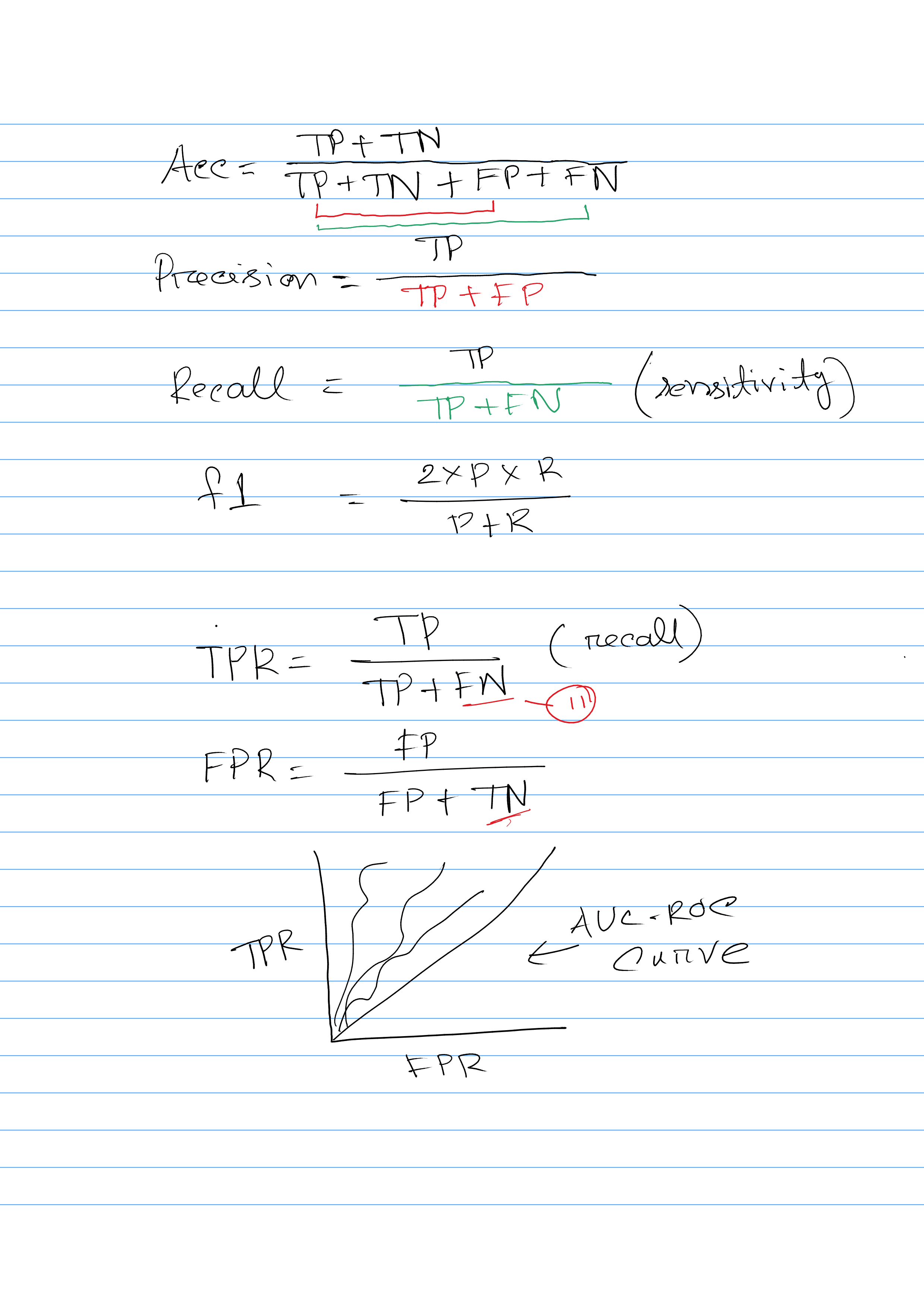

Confusion Matrix

Let’s say, we have a dataset which contains cancer patient data (Chest X-ray image), and we have built a machine learning model to predict if a patient has cancer or not.

True positive (TP): Given an image, if your model predicts the patient has cancer, and the actual target for that patient has also cancer, it is considered a true positive. Means the prediction is True.

True negative (TN): Given an image, if your model predicts that the patient does not have cancer and the actual target also says that patient doesn’t have cancer it is considered a true negative. Means the prediction is True.

False positive (FP): Given an image, if your model predicts that the patient has cancer but the the actual target for that image says that the patient doesn’t have cancer, it a false positive. Means the model prediction is False.

False negative (FN): Given an image, if your model predicts that the patient doesn’t have cancer but the actual target for that image says that the patient has cancer, it is a false negative. This prediction is also false.

Difference Evaluation Metric calculation

AUC-ROC:

AUC-ROC:

AUC - ROC curve is a performance measurement for the classification problems at various threshold settings. ROC is a probability curve and AUC represents the degree or measure of separability. It tells how much the model is capable of distinguishing between classes. Higher the AUC, the better the model is at predicting 0 classes as 0 and 1 classes as 1. By analogy, the Higher the AUC, the better the model.

**I would recommend using AUC over accuracy as it’s a much better indicator of model performance. This is due to AUC using the relationship between True Positive Rate and False Positive Rate to calculate the metric. If you are wanting to use accuracy as a metric, then I would encourage you to track other metrics as well, such as AUC or F1. Ref

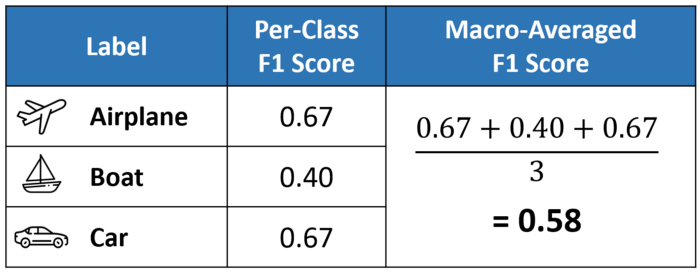

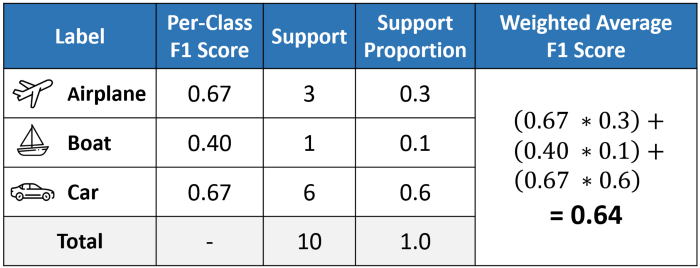

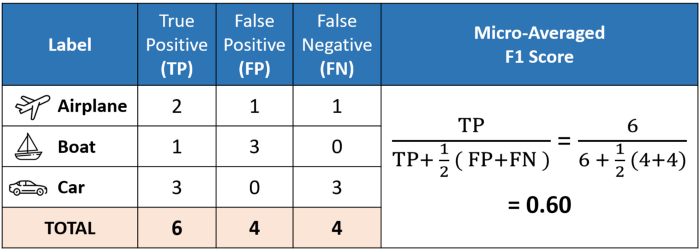

Difference among micro, macro, weighted f1-score

Excellent explanation: medium

Ref: Leung

When not to use accuracy as Metric

If the number of samples in one class outnumber the number of samples in another class by a lot. In these kinds of cases, it is not advisable to use accuracy as an evaluation metric as it is not representative of the data. So, you might get high accuracy, but your model will probably not perform that well when it comes to real-world samples, and you won’t be able to explain to your managers why. In these cases, it’s better to look at other metrics such as precision.

Which performance metrics for highly imbalanced multi class classification?

I would suggest to go with -

-

Weighted F1-Score or

-

Average AUC/Weighted AUC.

Common Evaluation Metrics in ML

If we talk about classification problems, the most common metrics used are:

-

Accuracy

- Precision (P)

- Recall (R)

- F1 score (F1)

- Area under the ROC (Receiver Operating Characteristic) curve or simply AUC (AUC)

- Log loss- Precision at k (P@k)

- Average precision at k (AP@k)

- Mean average precision at k (MAP@k)

When it comes to regression, the most commonly used evaluation metrics are:

-

Mean absolute error (MAE)

-

Mean squared error (MSE)

-

Root mean squared error (RMSE)

-

Root mean squared logarithmic error (RMSLE)

-

Mean percentage error (MPE)

-

Mean absolute percentage error (MAPE)- R2

MSE vs MAE vs RMSE

So a robust system or metric must be less affected by outliers. In this scenario it is easy to conclude that MSE may be less robust than MAE, since the squaring of the errors will enforce a higher importance on outliers.

If there is outlier in the data, and you want to ignore them, MAE is a better option. If you want to account for them in your loss function, go for MSE/RMSE.

MSE is highly biased for higher values. RMSE is better in terms of reflecting performance when dealing with large error values.

Deciding whether to use precision or recall or f1:

It is mathematically impossible to increase both precision and recall at the same time, as both are inversely proportional to each other.. Depending on the problem at hand we decide which of them is more important to us.

We will first need to decide whether it’s important to avoid false positives or false negatives for our problem. Precision is used as a metric when our objective is to minimize false positives and recall is used when the objective is to minimize false negatives. We optimize our model performance on the selected metric.

Below are a couple of cases for using precision/recall/f1.

-

An AI is leading an operation for finding criminals hiding in a housing society. The goal should be to arrest only criminals, since arresting innocent citizens can mean that an innocent can face injustice. However, if the criminal manages to escape, there can be multiple chances to arrest him afterward. In this case, false positive(arresting an innocent person) is more damaging than false negative(letting a criminal walk free). Hence, we should select precision in order to minimize false positives.

-

We are all aware of the intense security checks at airports. It is of utmost importance to ensure that people do not carry weapons along them to ensure the safety of all passengers. Sometimes these systems can lead to innocent passengers getting flagged, but it is still a better scenario than letting someone dangerous onto the flight. Each flagged individual is then checked thoroughly once more and innocent people are released. In this case, the emphasis is on ensuring false negatives(people with weapons getting into flights) are avoided during initial scanning, while detected false positives(innocent passengers flagged) are eventually let free. This is a scenario for minimizing false negatives and recall is the ideal measure of how the system has performed.

-

f1-score: Consider a scenario where your model needs to predict if a particular employee has to be promoted or not and promotion is the positive outcome. In this case, promoting an incompetent employee(false positive) and not promoting a deserving candidate(false negative) can both be equally risky for the company.

When avoiding both false positives and false negatives are equally important for our problem, we need a trade-off between precision and recall. This is when we use the f1 score as a metric. An f1 score is defined as the harmonic mean of precision and recall.

ref: analytics vidya

When to use F1 as a evaluation metric?

Accuracy is used when the True Positives and True negatives are more important while F1-score is used when the False Negatives and False Positives are crucial. Accuracy can be used when the class distribution is similar while F1-score is a better metric when there are imbalanced classes .

Rank aware evaluation Metrics for recommender systems

3 most popular rank-aware metrics available to evaluate recommendation systems:

- MRR: Mean Reciprocal Rank

- MAP: Mean Average Precision

- NDCG: Normalized Discounted Cumulative Gain

Recommender systems have a very particular and primary concern. They need to be able to put relevant items very high up the list of recommendations. Most probably, the users will not scroll through 200 items to find their favorite brand of earl grey tea. We need rank-aware metrics to select recommenders that aim at these two primary goals:

MRR: Mean Reciprocal Rank

This is the simplest metric of the three. It tries to measure “Where is the first relevant item?”.

MRR Pros

- This method is simple to compute and is easy to interpret.

- This method puts a high focus on the first relevant element of the list. It is best suited for targeted searches such as users asking for the “best item for me”.

- Good for known-item search such as navigational queries or looking for a fact.

MRR Cons

- The MRR metric does not evaluate the rest of the list of recommended items. It focuses on a single item from the list.

- It gives a list with a single relevant item just a much weight as a list with many relevant items. It is fine if that is the target of the evaluation.

- This might not be a good evaluation metric for users that want a list of related items to browse. The goal of the users might be to compare multiple related items.

MAP: Average Precision and Mean Average Precision

We want to evaluate the whole list of recommended items up to a specific cut-off N. The drawback of this metric is that it does not consider the recommended list as an ordered list.

MAP Pros

- Gives a single metric that represents the complex Area under the Precision-Recall curve. This provides the average precision per list.

- Handles the ranking of lists recommended items naturally. This is in contrast to metrics that considering the retrieved items as sets.

Calculate document similarity

Some of the most common and effective ways of calculating similarities are,

Cosine Distance/Similarity - It is the cosine of the angle between two vectors, which gives us the angular distance between the vectors. Formula to calculate cosine similarity between two vectors A and B is,

:extract_focal()/https%3A%2F%2Fmiro.medium.com%2Fmax%2F1144%2F1*YInqm5R0ZgokYXjNjE3MlQ.png)

In a two-dimensional space it will look like this,

:extract_focal()/https%3A%2F%2Fmiro.medium.com%2Fmax%2F1144%2F1*mRjgETrg-mPt8jMBu1VtDg.png)

Euclidean Distance - This is one of the forms of Minkowski distance when p=2. It is defined as follows,

:extract_focal()/https%3A%2F%2Fmiro.medium.com%2Fmax%2F742%2F0*55jbZL3qTdeEI5gL.png)

In two-dimensional space, Euclidean distance will look like this,

:extract_focal()/https%3A%2F%2Fmiro.medium.com%2Fmax%2F1144%2F1*aUFcVBD_dBAAayDFfAmo_A.png)

Fig2: Euclidean distance between two vectors A and B in 2-dimensional space

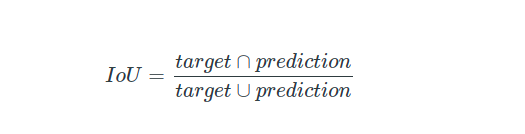

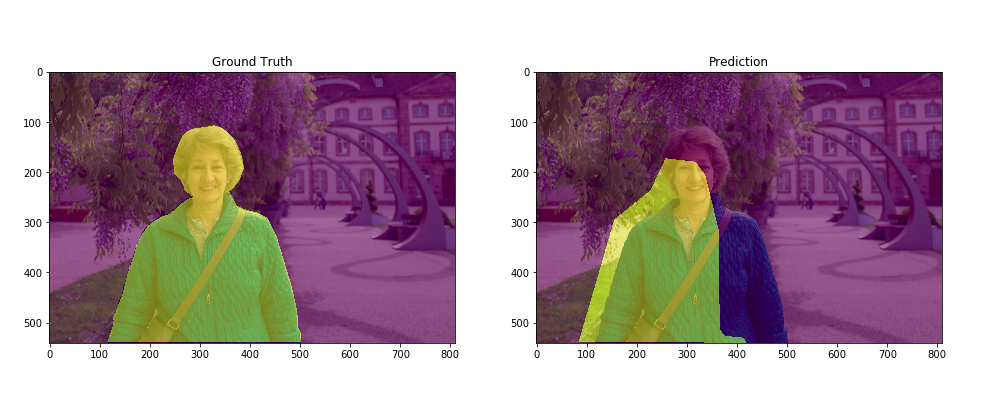

IoU: Intersection over Union Metric

The Intersection over Union (IoU) metric, also referred to as the Jaccard index, is essentially a method to quantify the percent overlap between the target mask and prediction output. This metric is closely related to the Dice coefficient which is often used as a loss function during training.

We can calculate this easily using Numpy.

intersection = np.logical_and(target, prediction)

union = np.logical_or(target, prediction)

iou_score = np.sum(intersection) / np.sum(union)

The IoU score is calculated for each class separately and then averaged over all classes to provide a global score.

Example Data:

Read more on Semantic/Instance segmentation evaluation here

Association Rule Learning

Ref : Saul Dobilas

Clustering is not the only unsupervised way to find similarities between data points. You can also use association rule learning techniques to determine if certain data points (actions) are more likely to occur together.

A simple example would be the supermarket shopping basket analysis. If someone is buying ground beef, does it make them more likely to also buy spaghetti? We can answer these types of questions by using the Apriori algorithm.

Apriori is part of the association rule learning algorithms, which sit under the unsupervised branch of Machine Learning.

This is because Apriori does not require us to provide a target variable for the model. Instead, the algorithm identifies relationships between data points subject to our specified constraints.

Let’s say, we have a dataset like this:

Assume we analyze the above transaction data to find frequently bought items and determine if they are often purchased together. To help us find the answers, we will make use of the following 4 metrics:

- Support

- Confidence

- Lift

Calculate Support

The first step for us and the algorithm is to find frequently bought items. It is a straightforward calculation that is based on frequency:

Support (Item) = Transaction of that Item / Total transactions

Support (Eggs) = 3 / 6 # 6 because there are shoppers 1 to 6

= 0.5

Support (Bacon) = 4 / 6

= 0.66

Support (Apple) = 2 / 6

= 0.33

Support (Eggs & Bacon) = 3 / 6 # 3 because there are 3 times Eggs and Becons were bought together

= 0.5

Support (Banana & Butter) = 1 / 6

= 0.16

Calculate Confidence

Now that we have identified frequently bought items let’s calculate confidence. This will tell us how confident (based on our data) we can be that an item will be purchased, given that another item has been purchased.

Confidence = conditional probability

Confidence (Eggs -> Bacon) = Support(Eggs & Bacon) / Support(Eggs)

= 0.5 / 0.5

= 1 (100%)

Confidence (Bacon -> Eggs) = Support(Bacon & Eggs) / Support(Bacon)

= 0.5 / 0.66

= 0.75

Calculate Lift

Given that different items are bought at different frequencies, how do we know that eggs and bacon really do have a strong association, and how do we measure it? You will be glad to hear that we have a way to evaluate this objectively using lift.

Lift(Eggs -> Bacon) = Confidence(Eggs -> Bacon) / Support(Bacon)

= 1 / 0.66

= 1.51

Lift(Bacon -> Eggs) = Confidence(Bacon -> Eggs) / Support(Eggs)

= 0.75 / 0.5

= 1.5

Note,

- lift>1 means that the two items are more likely to be bought together;

- lift<1 means that the two items are more likely to be bought separately;

- lift=1 means that there is no association between the two items.

Compare two images and find the difference between them

The difference between the two images can be measured using Mean Squared Error (MSE) and Structural Similarity Index (SSI).

MSE calculation

def mse(image_A, image_B):

# NOTE: the two images must have the same dimension

err = np.sum((image_A.astype("float") - image_B.astype("float")) ** 2)

err /= float(image_A.shape[0] * image_A.shape[1])

# return the MSE, the lower the error, the more "similar"

return err

SSI calculation

from skimage.metrics import structural_similarity as ssim

result = ssim(image_A, image_B)

# SSIM value can vary between -1 and 1, where 1 indicates perfect similarity.

Why your model performance poor?

- Implementation bugs

- Poor feature selection.

- Wrong hyperparameter choises

- Poor data quality, doesn’t represent real world data.

- Train data collection location and model serving location is not same. Drifts

- Dataset construction issues like Not enough data, noisy data, class imbalances, train/test from different distributions.

- Poor outlier handling.

- Wrong performance metric selection, doesn’t meet the business KPI.

- Bias Variance tradeoff.

- Concept drift.

Reasons why your model is performing bad in production than your locally built model performance

Ref: Liran Hasan link

Misalignment between actual business needs and machine learning objectives

Before starting any project, ask your team or your stakeholders: What business problem are we trying to solve? Why do we believe that machine learning is the right path? What is the measurable threshold of business value this project is trying to reach? What does “good enough” look like?

Concept Drift

The training dataset represents reality for the model: it’s one of the reasons that gathering as much data as possible is critical for a well-performing, robust model. Yet, the model is only trained on a snapshot of reality; The world is continuously changing. That means that the concepts the model has learned are changing as time passes by and accordingly, its performance degrades. That’s why it’s essential to be on the lookout for when concept drifts occur in your model and to detect them as soon as possible.

Application interface/ Updates

Often, the ML model will be utilized by applications that are developed entirely by other teams. A common problem that occurs is when these applications are updated/modified and consequently, the data that is sent to the model is no longer what the model expects. All this without the data science team ever knowing about it.

Feature processing bugs

Data goes through many steps of transformation before finally reaching the ML model. Changes to the data processing pipeline, whether a processing job or change of queue/database configuration, could ruin the data and corrupt the model that they are being sent to.

How do I select features for Machine Learning?

Why do we need feature selection?

Removing irrelevant features results in better performance. It gives us an easy understanding of the model. It also produce models that runs faster.

Techniques:

- Remove features that has high percentage of missing values.

- Drop variables/features that have a very low variation. Either standardize all variables or do standard deviation and find features with zero variation. Drop features with zero variation.

- Pairwise correlation: If two features are highly correlated, keeping only one will reduce dimensionality without much loss in information. Which variable to keep? The one that has higher correlation coefficient with the target.

- Drop variables that have a very low correlation with the target.

Also read here

Scikit-learn methods to select features

Removing features with low variance

from sklearn.feature_selection import VarianceThreshold

A feature with a higher variance means that the value within that feature varies or has a high cardinality. On the other hand, lower variance means the value within the feature is similar, and zero variance means you have a feature with the same value.

Intuitively, you want to have a varied features. That is why we could select the feature based on the variance we select previously.

A variance Threshold is a simple approach to eliminating features based on our expected variance within each feature. Although, there are some down-side to the Variance Threshold method. The Variance Threshold feature selection only sees the input features (X) without considering any information from the dependent variable (y). It is only useful for eliminating features for Unsupervised Modelling rather than Supervised Modelling.

Variance Threshold only useful when we consider the feature selection for Unsupervised Learning.

Univariate feature selection

from sklearn.feature_selection import SelectKBest, chi2

X_new = SelectKBest(chi2, k=5).fit_transform(X, y)

Univariate feature selection works by selecting the best features based on univariate statistical tests. It can be seen as a preprocessing step to an estimator. Univariate Feature Selection is a feature selection method based on the univariate statistical test, e,g: chi2, Pearson-correlation, and many more.

Recursive Feature Elimination (RFE)

Recursive Feature Elimination or RFE is a Feature Selection method utilizing a machine learning model to selecting the features by eliminating the least important feature after recursively training.

First, the estimator is trained on the initial set of features and the importance of each feature is obtained either through any specific attribute (such as coef_, feature_importances_) or callable. Then, the least important features are pruned from current set of features.

Watch this video from Data School: https://youtu.be/YaKMeAlHgqQ

TensorFlow interview questions

- https://www.mlstack.cafe/blog/tensorflow-interview-questions

- https://www.vskills.in/interview-questions/deep-learning-with-keras-interview-questions

Differences between Linear Regression and Logistic regression

| Linear Regression | Logistic Regression |

|---|---|

| Linear regression is used to predict the continuous dependent variable; regression algorithm. | Logistic Regression is used to predict the categorical dependent variable; *classification* algorithm. |

| Loss Function: is uses MSE/MAE/RMSE to calculate errors. | Loss Function: log loss is used to calculate errors. |

| Outputs numeric values. | Sigmoid activation is used in the output to squash the values in the range of 0-1. Then we use a threshold for classification. |

| To perform Linear regression we require a linear relationship between the dependent and independent variables. | To perform Logistic regression we do not require a linear relationship between the dependent and independent variables. |

| Linear regression assumes Gaussian (or normal) distribution of the dependent variable. | Logistic regression assumes the binomial distribution of the dependent variable. |

Why Logistic Regression is called regression?

It is named ‘Logistic Regression’ because its underlying technique is quite the same as Linear Regression. The term “Logistic” is taken from the Logit function that is used in this method of classification.

In linear regression, we predict the output variable Y base on the weighted sum of input variables.

The formula is as follows:

In linear regression, our main aim is to estimate the values of Y-intercept and weights, minimize the cost function, and predict the output variable Y.

In logistic regression, we perform the exact same thing but with one small addition. We pass the result through a special function known as the Sigmoid Function to predict the output Y.

Ref: Medium

Recommender System

Traditionally, recommender systems are based on methods such as clustering, nearest neighbor and matrix factorization.

Collaborative filtering:

Based on past history and what other users with similar profiles preferred in the past.

Content-based:

Based on the content similarity. For example, “related articles”.

Container vs Docker vs Kubernetes

Container: If we can create an environment that we can transfer to other machines (for example: your friend’s computer or a cloud service provider like Google Cloud Platform), we can reproduce the results anywhere. Hence, a container is a type of software that packages up an application and all its dependencies so the application runs reliably from one computing environment to another.

Docker: Docker is a company that provides software (also called Docker) that allows users to build, run and manage containers. While Docker’s container are the most common, there are other less famous alternatives such as LXD and LXC that provides container solution.

Kubernetes: Kubernetes is a powerful open-source system developed by Google back in 2014, for managing containerized applications. In simple words, Kubernetes is a system for running and coordinating containerized applications across a cluster of machines. It is a platform designed to completely manage the life cycle of containerized applications.

Ref: Moez Ali

What are core features of Kubernetes?

Load Balancing: Automatically distributes the load between containers.

Scaling: Automatically scale up or down by adding or removing containers when demand changes such as peak hours, weekends and holidays.

Storage: Keeps storage consistent with multiple instances of an application.

Self-healing: Automatically restarts containers that fail and kills containers that don’t respond to your user-defined health check.

Automated Rollouts: you can automate Kubernetes to create new containers for your deployment, remove existing containers and adopt all of their resources to the new container.

Ref: Moez Ali

Why do you need Kubernetes if you have Docker?

Imagine a scenario where you have to run multiple docker containers on multiple machines to support an enterprise level ML application with varied workloads during day and night. As simple as it may sound, it is a lot of work to do manually.

You need to start the right containers at the right time, figure out how they can talk to each other, handle storage considerations, and deal with failed containers or hardware. This is the problem Kubernetes is solving by allowing large numbers of containers to work together in harmony, reducing the operational burden.

In the lifecycle of any application, Docker is used for packaging the application at the time of deployment, while kubernetes is used for rest of the life for managing the application.

Ref: Moez Ali, original post link: TDS

Main Components of Kubernetes

Watch this video

API Server

The API server is the front end for the Kubernetes control plane. The main implementation of a Kubernetes API server is kube-apiserver. kube-apiserver is designed to scale horizontally—that is, it scales by deploying more instances. You can run several instances of kube-apiserver and balance traffic between those instances.

etcd

Stores all cluster data. key value store.

kube-scheduler

Control plane component that watches for newly created Pods with no assigned node, and selects a node for them to run on.

Factors taken into account for scheduling decisions include: individual and collective resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.

kubelet

An agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

The kubelet takes a set of PodSpecs that are provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy. The kubelet doesn’t manage containers which were not created by Kubernetes.

Kube proxy

kube-proxy maintains network rules on nodes. These network rules allow network communication to your Pods from network sessions inside or outside of your cluster.

Difference between node and pod in Kubernetes

Pod is the smallest unit of deployment in Kubernetes. Kubernetes doesn’t deploy containers straight away. Whenever Kubernetes have to deploy a container it is wrapped and deployed within a pod.

Node is a physical server or virtual machine which Kubernetes uses to deploy pods on.

Different types of Images

An Image, by definition, is essentially a visual representation of something that depicts or records visual perception. Images are classified in one of the three types.

- Binary Images

- Grayscale Images

- Color Images

Binary Images: This is the most basic type of image that exists. The only permissible pixel values in Binary images are 0(Black) and 1(White). Since only two values are required to define the image wholly, we only need one bit and hence binary images are also known as 1-Bit images.

Grayscale Images: Grayscale images are by definition, monochrome images. Monochrome images have only one color throughout and the intensity of each pixel is defined by the gray level it corresponds to. Generally, an 8-Bit image is the followed standard for grayscale images implying that there are 28= 256 grey levels in the image indexed from 0 to 255.

Color Images: Color images can be visualized by 3 color planes(Red, Green, Blue) stacked on top of each other. Each pixel in a specific plane contains the intensity value of the color of that plane. Each pixel in a color image is generally comprised of 24 Bits/pixel with 8 pixels contributing from each color plane.

ref: geeksforgeeks

Image type conversion from Color to Binary

Coloured image →Gray Scale image →Binary image

This can be done using different methods like → Adaptive Thresholding → Otsu’s Binarization → Local Maxima and Minima Method

Ref: medium

Sigmoid Kernel

The function sigmoid_kernel computes the sigmoid kernel between two vectors. The sigmoid kernel is also known as hyperbolic tangent, or Multilayer Perceptron.

from sklearn.metrics.pairwise import sigmoid_kernel

# tfv_matrix: vector

# https://scikit-learn.org/stable/modules/generated/sklearn.metrics.pairwise.sigmoid_kernel.html

sig = sigmoid_kernel(tfv_matrix, tfv_matrix)

Semantic Search

Semantic search is a data searching technique in a which a search query aims to not only find keywords, but to determine the intent and contextual meaning of the the words a person is using for search. Semantics refer to the philosophical study of meaning.

Mean, Median

Text classification

Approaches to automatic text classification can be grouped into three categories:

- Rule-based methods

- Machine learning (data-driven) based methods

- Hybrid methods

neural network architectures, such as models based on

- recurrent neural networks (RNNs),

- Convolutional neural networks (CNNs),

- Attention,

- Transformers,

- Capsule Nets

Different Optimization Algorithms in ANN

Optimizers are algorithms or methods used to change the attributes of your neural network such as weights and learning rate in order to reduce the losses. How you should change your weights or learning rates of your neural network to reduce the losses is defined by the optimizers you use. Optimization algorithms or strategies are responsible for reducing the losses and to provide the most accurate results possible.

Different types of optimizers:

- Gradient Descent (Batch Gradient Descent): performs a parameter update for entire training dataset.

- Stochastic Gradient Descent: performs a parameter update for each training example.

- Mini-Batch Gradient Descent: performs parameter update for every mini-batch of n training examples (batch size)

- Momentum: It is a method that helps accelerate SGD in the relevant direction and dampens oscillations by doing exponential weighted average.

- AdaGrad: It adapts the learning rate to the parameters.

- AdaDelta

- Adam

- RMSProp

If your input data is sparse, then you likely achieve the best results using one of the adaptive learning-rate methods. An additional benefit is that you won’t need to tune the learning rate but likely achieve the best results with the default value.

Adam is the best optimizers. If one wants to train the neural network in less time and more efficiently than Adam is the optimizer. For sparse data use the optimizers with dynamic learning rate.

If, want to use gradient descent algorithm than min-batch gradient descent is the best option.

See how each of these works:

Batch Size

- Batch Gradient Descent. Batch size is set to the total number of examples in the training dataset.

- Stochastic Gradient Descent. Batch size is set to one.

- Minibatch Gradient Descent. Batch size is set to more than one and less than the total number of examples in the training dataset.

Small Batch Size

- A smaller batch size reduces overfitting because it increases the noise in the training process.

- A smaller batch size can improve the generalization of the model.

If we use a small batch size, the optimizer will only see a small portion of the data during every cycle. This introduces noise in the training process because the gradient of the batch may take you in entirely different directions. However, on average, you will head towards a reasonable local minimum like you would using a larger batch size. Here is Jason Brownlee on “How to Control the Stability of Training Neural Networks With the Batch Size”:

Smaller batch sizes are noisy, offering a regularizing effect and lower generalization error.

Python’s built-in sorted() function

The built-in sorting algorithm of Python uses a special version of merge sort, called Timsort, which runs in O(n log n) on average and worst-case both.

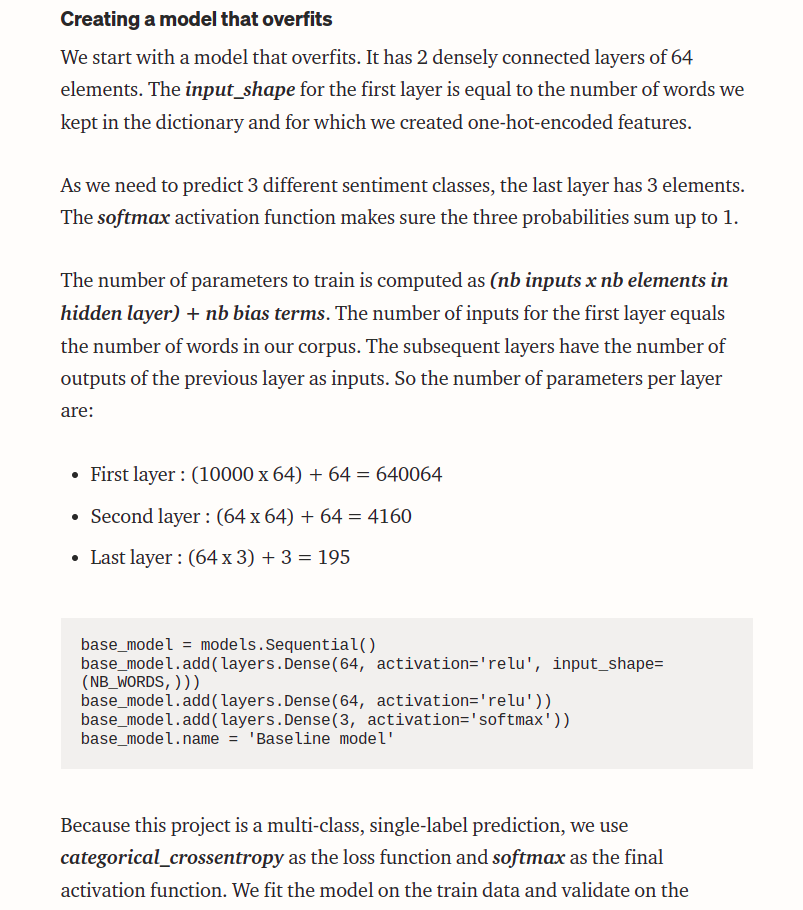

Multi-class Text Classification

- For multi-class classification: loss-function: categorical cross entropy (For binary classification: binary cross entropy loss).

- BERT: Take a pre-trained BERT model, add an untrained dense layer of neurons, train the layer for any downstream task, …

Backpropagation

The backward function contains the backpropagation algorithm, where the goal is to essentially minimize the loss with respect to our weights. In other words, the weights need to be updated in such a way that the loss decreases while the neural network is training (well, that is what we hope for). All this magic is possible with the gradient descent algorithm.

Activation function vs Loss function

An Activation function is a property of the neuron, a function of all the inputs from previous layers and its output, is the input for the next layer.

If we choose it to be linear, we know the entire network would be linear and would be able to distinguish only linear divisions of the space.

Thus we want it to be non-linear, the traditional choice of function (tanh / sigmoid) was rather arbitrary, as a way to introduce non-linearity.

One of the major advancements in deep learning, is using ReLu, that is easier to train and converges faster. but still - from a theoretical perspective, the only point of using it, is to introduce non-linearity. On the other hand, a Loss function, is the goal of your whole network.

it encapsulate what your model is trying to achieve, and this concept is more general than just Neural models.

A Loss function, is what you want to minimize, your error. Say you want to find the best line to fit a bunch of points:

D={(x1,y1),…,(x**n,y**n)}

\[D={(x1,y1),…,(x**n,y**n)}\]Your model (linear regression) would look like this:

y=mx+n

And you can choose several ways to measure your error (loss), for example L1:

or maybe go wild, and optimize for their harmonic loss:

Activation Functions

For a neural network to learn complex patterns, we need to ensure that the network can approximate any function, not only linear ones. This is why we call it “non-linearities.”

The way we do this is by using activation functions.

An interesting fact: the Universal approximation theorem states that, when using non-linear activation functions, we can turn a two-layer neural network into a universal function approximator. This is an excellent illustration of how powerful neural networks are.

Some of the most popular activation functions are sigmoid, and ReLU. Convolution operation is a linear operation without the activation functions.

Batch Inference vs Online Inference

Read here: https://mlinproduction.com/batch-inference-vs-online-inference/

t-SNE algorithm

(t-SNE) t-Distributed Stochastic Neighbor Embedding is a non-linear dimensionality reduction algorithm used for exploring high-dimensional data. It maps multi-dimensional data to two or more dimensions suitable for human observation.

Cross-Validation data

We should not use augmented data in cross validation dataset.

Hyper-parameter optimization techniques

- Grid Search

- Bayesian Optimization.

- Random Search

Normalization in ML

Normalizing helps keep the network weights near zero which in turn makes back-propagation more stable. Without normalization, networks will tend to fail to learn.

Why do call scheduler.step() in pytorch?

If you don’t call it, the learning rate won’t be changed and stays at the initial value.

Momentum and Learning rate dealing

If the LR is low, then momentum should be high and vice versa. The basic idea of momentum in ML is to increase the speed of training.

Momentum helps to know the direction of the next step with the knowledge of the previous steps. It helps to prevent oscillations. A typical choice of momentum is between 0.5 to 0.9.

YOLO

You only look once (YOLO) is SOTA real-time object detection system.

Object Recognition

Object classification + Object localization (bbox) = Object detection

Object classification + Object localization + Object detection = Object Recognition (Object detection)

mAP object detection

To evaluate object detection models like R-CNN and YOLO, the mean average precision (mAP) is used. The mAP compares the ground-truth bounding box to the detected box and returns a score. The higher the score, the more accurate the model is in its detections.

Semantic Segmentation: U-Net

Instance segmentation: Mask-RCNN (two-stage)

- In semantic segmentation, each pixel is assigned to an object category;

- In instance segmentation, each pixel is assigned to an individual object;

- The U-Net architecture can be used for semantic segmentation;

- The Mask R-CNN architecture can be used for instance segmentation.

Instance segmentation:

Existing methods in the literature are often divided into two groups, two-stage, and one-stage instance segmentation.

Two stage segmentation

Two-stage instance segmentation methods usually deal with the problem as a detection task followed by segmentation, they detect the bounding boxes of the objects in the first stage, and a binary segmentation is performed for each bounding box in the second stage.

Mask R-CNN (He et al., 2017), which is an extension for the Faster R-CNN, adds an additional branch that computes the mask to segment the objects and replaces RoI-Pooling with RoI-Align to improve the accuracy. Hence, the Mask R-CNN loss function combines the losses of the three branches; bounding box, recognized class, and the segmented mask.

Mask Scoring R-CNN (Huang et al., 2019) adds a mask-IoU branch to learn the quality of the predicted masks in order to improve the performance of the instance segmentation by producing more precise mask predictions. The mAP is improved from 37.1% to 38.3% compared to the Mask R-CNN on the COCO dataset.

PANet (Liu et al., 2018) is built upon the Mask R-CNN and FPN networks. It enhanced the extracted features in the lower layers by adding a bottom-up pathway augmentation to the FPN network in addition to proposing adaptive feature pooling to link the features grids and all feature levels. PANet achieved mAP of 40.0% on the COCO dataset, which is higher than the Mask R-CNN using the same backbone by 2.9% mAP.

Although the two-stage methods can achieve state-of-the-art performance, they are usually quite slow and can not be used for real-time applications. Using one TITAN GPU, Mask R-CNN runs at 8.6 fps, and PANet runs at 4.7 fps. Real-time instance segmentation usually requires running above 30 fps.

One stage segmentation

One-stage methods usually perform detection and segmentation directly.

InstanceFCN (Dai et al., 2016) uses FCN ( FCN — Fully Convolutional Network) to produce several instance-sensitive score maps that have information for the relative location, then object instances proposals are generated by using an assembling module.

YOLACT (Bolya et al., 2019a), which is one of the first attempts for real-time instance segmentation, consists of feature backbone followed by two parallel branches. The first branch generates multiple prototype masks, whereas the second branch computes mask coefficients for each object instance. After that, the prototypes and their corresponding mask coefficients are combined linearly, followed by cropping and threshold operations to generate the final object instances. YOLACT achieved mAP of 29.8% on the COCO dataset at 33.5 fps using Titan Xp GPU.

YOLACT++ (Bolya et al., 2019b) is an extension for YOLACT with several performance improvements while keeping it real-time. Authors utilized the same idea of the Mask Scoring R-CNN and added a fast mask re-scoring branch to assign scores to the predicted masks according to the IoU of the mask with ground-truth. Also, the 3x3 convolutions in specific layers are replaced with 3x3 deformable convolutions in the backbone network. Finally, they optimized the prediction head by using multi-scale anchors with different aspect ratios for each FPN level. YOLACT++ achieved 34.1% mAP (more than YOLACT by 4.3%) on the COCO dataset at 33.5 fps using Titan Xp GPU. However, the deformable convolution makes the network slower, as well as the upsampling blocks in YOLACT networks.

TensorMask (Chen et al., 2019) explored the dense sliding window instance segmentation paradigm by utilizing structured 4D tensors over the spatial domain. Also, tensor bipyramid and aligned representation are used to recover the spatial information in order to achieve better performance. However, these operations make the network slower than two-stage methods such as Mask R-CNN.

CenterMask (Lee & Park, 2020) decomposed the instance segmentation task into two parallel branches: Local Shape prediction branch, which is responsible for separating the instances, and Global Saliency branch to segment the image into a pixel-to-pixel manner. The branches are built upon a point representation layer containing the local shape information at the instance centers. The point representation is utilized from CenterNet (Zhou et al., 2019a) for object detection with a DLA-34 as a backbone. Finally, the outputs of both branches are grouped together to form the final instance masks. CenterMask achieved mAP of 33.1% on the COCO dataset at 25.2 fps using Titan Xp GPU. Although the one-stage methods run at a higher frame rate, the network speed is still an issue for real-time applications. The bottlenecks in the one-stage methods in the upsampling process in some methods, such as YOLACT.

Target Value Types

Categorical variables can be:

- Nominal

- Ordinal

- Cyclical

- Binary

Nominal variables are variables that have two or more categories which do not have any kind of order associated with them. For example, if gender is classified into two groups, i.e. male and female, it can be considered as a nominal variable.Ordinal variables, on the other hand, have “levels” or categories with a particular order associated with them. For example, an ordinal categorical variable can be a feature with three different levels: low, medium and high. Order is important.As far as definitions are concerned, we can also categorize categorical variables as binary, i.e., a categorical variable with only two categories. Some even talk about a type called “cyclic” for categorical variables. Cyclic variables are present in “cycles” for example, days in a week: Sunday, Monday, Tuesday, Wednesday, Thursday, Friday and Saturday. After Saturday, we have Sunday again. This is a cycle. Another example would be hours in a day if we consider them to be categories.

Autoencoder

An autoencoder is a type of artificial neural network used to learn efficient data codings in an unsupervised manner. The aim of an autoencoder is to learn a representation (encoding) for a set of data, typically for dimensionality reduction.

See visual representation and code in pytorch. A great notebook from cstoronto.

Difference between AutoEncoder(AE) and Variational AutoEncoder(VAE):

The key difference between and autoencoder and variational autoencoder is autoencoders learn a “compressed representation” of input (could be image,text sequence etc.) automatically by first compressing the input (encoder) and decompressing it back (decoder) to match the original input. The learning is aided by using distance function that quantifies the information loss that occurs from the lossy compression. So learning in an autoencoder is a form of unsupervised learning (or self-supervised as some refer to it) - there is no labeled data.

Instead of just learning a function representing the data ( a compressed representation) like autoencoders, variational autoencoders learn the parameters of a probability distribution representing the data. Since it learns to model the data, we can sample from the distribution and generate new input data samples. So it is a generative model like, for instance, GANs.

So, VAE are generative autoencoders, meaning they can generate new instances that look similar to original dataset used for training. VAE learns probability distribution of the data whereas autoencoders learns a function to map each input to a number and decoder learns the reverse mapping.

Why PyTorch?

PyTorch’s clear syntax, streamlined API, and easy debugging make it an excellent choice for introducing deep learning. PyTorch’s dynamic graph structure lets you experiment with every part of the model, meaning that the graph and its input can be modified during runtime. This is referred to as eager execution. It offers the programmer better access to the inner workings of the network than a static graph (TF) does, which considerably eases the process of debugging the code.

Want to make your own loss function? One that adapts over time or reacts to certain conditions? Maybe your own optimizer? Want to try something really weird like growing extra layers during training? Whatever - PyTorch is just here to crunch the numbers - you drive. [Ref: Ref: Deep Learning with PyTorch - Eli Stevens]

PyTorch vs NumPy

PyTorch is not the only library that deals with multidimensional arrays. NumPy is by far the most popular multidimensional array library, to the point that it has now arguably become the lingua franca of data science. PyTorch features seamless interoperability with NumPy, which brings with it first-class integration with the rest of the scientific libraries in Python, such as SciPy, Scikit-learn, and Pandas. Compared to NumPy arrays, PyTorch tensors have a few superpowers, such as the ability to perform very fast operations on graphical processing units (GPUs), distribute operations on multiple devices or machines, and keep track of the graph of computations that created them.

Frequently used terms in ML

Feature engineering

Features are transformations on input data that facilitate a downstream algorithm, like a classifier, to produce correct outcomes on new data. Feature engineering consists of coming up with the right transformations so that the downstream algorithm can solve a task. For instance, in order to tell ones from zeros in images of handwritten digits, we would come up with a set of filters to estimate the direction of edges over the image, and then train a classifier to predict the correct digit given a distribution of edge directions. Another useful feature could be the number of enclosed holes, as seen in a zero, an eight, and, particularly, loopy twos. Read this article.

Tensor

Tensor is multidimensional arrays similar to NumPy arrays.

ImageNet

ImageNet dataset (http://imagenet.stanford.edu). ImageNet is a very large dataset of over 14 million images maintained by Stanford University. All of the images are labeled with a hierarchy of nouns that come from the WordNet dataset (http://wordnet.princeton.edu), which is in turn a large lexical database of the English language.

Embedding

An embedding is a relatively low-dimensional space into which you can translate high-dimensional vectors. The embedding in machine learning or NLP is actually a technique mapping from words to vectors which you can do better analysis or relating, for example, “toyota” or “honda” can be hardly related in words, but in vector space it can be set to very close according to some measure, also you can strengthen the relation ship of word by setting: king-man+woman = Queen. So we can set boy to (1,0) and then set girl to (-1,0) to show they are in the same dimension but the meaning is just opposite.

Baseline

A baseline is the result of a very basic model/solution. You generally create a baseline and then try to make more complex solutions in order to get a better result. If you achieve a better score than the baseline, it is good.

Benchmarking

It a process of measuring the performance of a company’s products, services, or processes against those of another business considered to be the best in the industry, aka “best in class.” The point of benchmarking is to identify internal opportunities for improvement. The same concept applies for the ML use cases as well. For example, It’s a tool, comparing how well one ML method does at performing a specific task compared to another ML method which is already known as the best in that category.

Bands and Modes of Image

An image can consist of one or more bands of data. The Python Imaging Library allows you to store several bands in a single image, provided they all have the same dimensions and depth. For example, a PNG image might have ‘R’, ‘G’, ‘B’, and ‘A’ bands for the red, green, blue, and alpha transparency values. Many operations act on each band separately, e.g., histograms. It is often useful to think of each pixel as having one value per band.

The mode of an image defines the type and depth of a pixel in the image. The current release supports the following standard modes: Read

Mixed-Precision

Mixed precision is the use of both 16-bit and 32-bit floating-point types in a model during training to make it run faster and use less memory. By keeping certain parts of the model in the 32-bit types for numeric stability, the model will have a lower step time and train equally as well in terms of the evaluation metrics such as accuracy.

Hyperparameters

With neural networks, you’re usually working with hyperparameters once the data is formatted correctly. A hyperparameter is a parameter whose value is set before the learning process begins. It determines how a network is trained and the structure of the network. Few hyperparameter example:

- Number of hidden layers in the network

- Number of hidden units for each hidden layer

- Learning rate

- Activation function for different layers

- Momentum

- Learning rate decay.

- Mini-batch size.

- Dropout rate (if we use any dropout layer)

- Number of epochs

Quantization

Quantization that realizes speeding up and memory saving by replacing the operations of the neural network mainly on floating point operations with integer operations.

This is the most easy way to make inference from trained models which reduce operation costs, reduce calculation loads, and reduce memory consumption.

Methods for finding out Hyperparameters

-

Manual Search

-

Grid Search (http://machinelearningmastery.com/grid-search-hyperparameters-deep-learning-models-python-keras/)

In scikit-learn there is a

from sklearn.model_selection import GridSearchCVclass to find the best parameters using GridSearch.from sklearn.model_selection import GridSearchCV model = KerasClassifier(build_fn=create_model, epochs=100, batch_size=10, verbose=0) optimizer = ['SGD', 'RMSprop', 'Adagrad', 'Adadelta', 'Adam', 'Adamax', 'Nadam'] param_grid = dict(optimizer=optimizer) grid = GridSearchCV(estimator=model, param_grid=param_grid, n_jobs=-1, cv=3)We can’t use the gridsearch directly with PyTorch, but there is a library which is called skorch. Using skorch, we can use the sklearns’s gridsearch with PyTorch models.

-

Random Search

In scikit-learn there is a Class from sklearn.model_selection import RandomizedSearchCV which we can use to do random search. We can’t use the random search directly with PyTorch, but there is a library which is called skorch. Using skorch, we can use the sklearn’s RandomizedSearchCV with PyTorch models.

Read more …

-

Bayesian Optimization

There are different libraries for searching hyperparameter, for example: optuna, hypersearch. gridsearchCV in sklearn etc.

If your machine learning model is 99% correct, what are the possible wrong things happened?

- Overfitting.

- Wrong evaluation metric

- Bad validation set

- Leakage: you’re accidentally using 100% of the training set as your test set.

- Extreme class imbalance (with, say, 98% in one class) combined with the accuracy metric or a feature that leaks the target.

GAN

GAN, where two networks, one acting as the painter and the other as the art historian, compete to outsmart each other at creating and detecting forgeries. GAN stands for generative adversarial network, where generative means something is being created (in this case, fake masterpieces), adversarial means the two networks are competing to outsmart the other, and well, network is pretty obvious. These networks are one of the most original outcomes of recent deep learning research. Remember that our overarching goal is to produce synthetic examples of a class of images that cannot be recognized as fake. When mixed in with legitimate examples, a skilled examiner would have trouble determining which ones are real and which are our forgeries.